Banning TikTok Is The Right Call

… but not because of Chinese surveillance

What does Section 230 of the Communications Decency Act have in common with TikTok?

They are both allegedly designed to benefit and protect children, but end up harming them greatly.

The Communications Decency Act was adopted in 1996 to protect children from unrestricted access to internet pornography. At the time, politicians thought of online platforms as digital newspapers and could not possibly have foreseen the hyper-stimulating, personalized, algorithmically curated and omnipresent social media experience that has become integral to modern life. Section 230(c)(1) in particular has been called “the twenty-six words that created the internet”, although the policymakers who adopted it did so as an afterthought, a detail in a much larger legal framework. No one intended or foresaw that Section 230(c)(1) would end up as an accountability sink for Big Tech social media, absolving the companies of any social and legal responsibility related to their platforms. Next week, we will take a closer look at Section 230’s impact and explain why and how it should be reformed, using TikTok as a case example.

TikTok’s gamified design is marketed to, and indeed appeals most to, children and teens. Measured by average daily usage time, TikTok is the most successful social media app in the world, ever. The revenue of TikTok’s parent company ByteDance recently surpassed that of Meta (despite ByteDance being valued at less than one-fifth of Meta’s market cap). However, behind TikTok’s commercial success story hides a darker story about addictive technology and algorithmic harm. That second story is the topic of this week’s post. As a courtesy to free readers, I will write this post backwards, starting with my conclusion.

On September 17, Trump is expected to grant TikTok another extension to find an American buyer or face a potential ban in the US. (Update: Treasury Secretary Scott Bessent said yesterday that a framework deal with China has been reached and is expected to be finalized this Friday.) I personally think a TikTok ban would be a net benefit to society. And it has nothing to do with geopolitics, or the considerable privacy and security concerns associated with TikTok’s Chinese ownership.

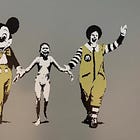

On the positive side, TikTok has provided independent creators with new opportunities for exposure and revenue. Which is great! However, the negative implications of TikTok outweigh these benefits. TikTok is dangerous to use for its prime audience - children - and it has a cancerous impact on society as a whole. It surely cannot be a cosmic coincidence that the app is banned in China, where it’s from.

The dangers and harms of TikTok are driven by its For You Page (FYP) algorithm, which has a very unfortunate tendency to lead users into so-called “rabbit holes”. You can find a report by Amnesty International about the topic here. I also recommend checking out Gurwinder’s post “TikTok is a Time Bomb”, which was a major inspiration for me. Unless TikTok reforms its algorithm, offers users more control over their experience within the app, gives public authorities and independent experts more transparency about how it works, and implements a reliable mechanism for age verification, I don’t see any constructive path forward for TikTok that is beneficial to society.

The paid analysis below is an excerpt from a draft of my upcoming book that will offer more insights on the harms of TikTok.