Potential Landmark Case on Social Media's Destruction of Youth Mental Health

The multi-state lawsuit against Meta, social media's harmful effects on young people, manipulative product features, and Meta's denial of key findings from its own internal research.

Introduction

In the presentation below, Jonathan Haidt shares the research findings from his upcoming book, The Anxious Generation: How the Great Rewiring of Childhood is Causing an Epidemic of Mental Illness, which he has also written about on his Substack, After Babel.

I highly recommend watching this video. If you don't have time now, bookmark it for later.

I believe Haidt's warning to teachers, education administrators, parents, and the public is timely and will age well in the years ahead. The negative impacts of smartphones and social media, especially on young minds, are profoundly devastating and hard to ignore at this point.

Most of us have become familiar with the darker side of the social media business, for example through the 2020 Netflix documentary The Social Dilemma. Yet very little has been done to change the status quo. That is the issue we will take a deep dive into today.

The Multi-State Lawsuit Against Meta Platforms Inc.

I finally got around to reading the 233-page complaint against Meta Platforms Inc., filed and signed by 33 US state attorneys general on October 24, 2023 (read the full complaint here). The multi-state lawsuit, co-led by California and Colorado, accuses Meta of offering kids and teenagers digital services with harmful features while the company publicly downplayed, rejected, or ignored those harmful effects.

In addition to the multi-state lawsuit, the attorneys general of eight more states and the District of Columbia filed separate lawsuits in their own courts on October 24, which means that Meta was sued by 41 states and the District of Columbia on the same day.

The lawsuits followed investigations by the attorneys general that spanned nearly two years. Facebook came under regulatory scrutiny after whistleblower Frances Haugen leaked internal company documents to the U.S. Securities and Exchange Commission. The press dubbed these documents the “Facebook Papers” or the “Facebook Files.”

Among many other compromising findings, the leaked documents included a slide presentation posted to Facebook’s internal message board. The presentation revealed that “thirty-two percent of teen girls said that when they felt bad about their bodies, Instagram made them feel worse (...)” and concluded that “comparisons on Instagram can change how young women view and describe themselves.” Another internal slide showed that “among teens who reported suicidal thoughts, 13% of British users and 6% of American users traced the desire to kill themselves to Instagram.”

Meta has consistently denied findings like these for years. For example, at a congressional hearing on March 25, 2021, Meta CEO Mark Zuckerberg was asked about social media’s harm to young people’s mental health, to which he replied:

“Overall, the research that we've seen is that using social apps to connect with other people can have positive mental health benefits and well-being benefits by helping people feel more connected and less lonely.”

Unfortunately, many passages in the multi-state complaint are redacted because Meta's internal research is proprietary. However, the public parts still reveal plenty about the clever tactics Meta uses to capture and retain its users' attention and manipulate their brain chemistry. Copies and variations of these tactics are now used by many other social platforms and apps, most notably, and with great success, by TikTok.

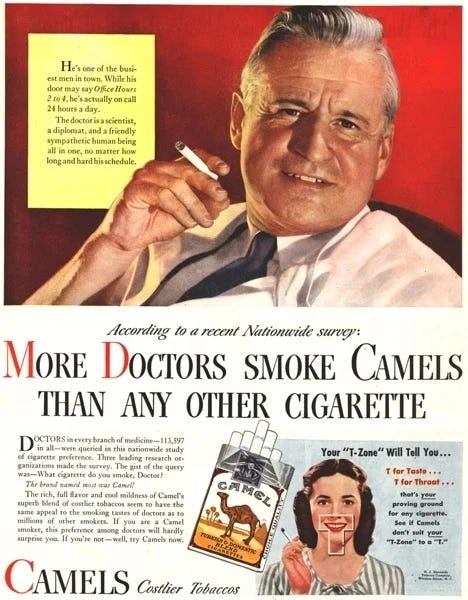

The lawsuit is in some ways comparable to the landmark 1964 report by the US Surgeon General, which summarized the existing body of evidence for the strong link between cigarette smoking and lung cancer. The report led to new policies and a new public awareness of smoking’s negative health effects, although it came many years after the first major study on the subject in 1950. Throughout the 1930s and 1940s, cigarette companies ran ads that presented smoking as cool and healthy.

However, the analogy between the “profits over public health” attitude of today's big tech companies and that of big tobacco in the previous century is not perfect. After reading through the multi-state complaint, it’s clear to me that Meta has acted orders of magnitude more dangerously, recklessly, and dishonestly than the big tobacco companies ever did.

The Link Between Social Media Use and Declining Mental Health for Kids and Teens

If the US state attorneys general win this case, it would not be the first time a court has found that social media platforms can exacerbate mental health conditions. In September 2022, a court in the UK ruled that Instagram directly contributed to the suicide of a 14-year-old girl, Molly.

No penalty was imposed on Instagram because the finding came not from a civil or criminal trial but from a coroner's inquest, an investigation carried out by a public official to establish the facts surrounding a death and its cause.

Outwardly, Molly was a normal, well-functioning young girl, but she had a secret online life that her parents didn’t know about. After her death, Molly’s father gained access to her Instagram account, where he found a folder called “Unimportant things” with dozens of troubling images and quotes. As part of the investigation, Meta reluctantly agreed to hand over more than 16,000 pages from Molly’s Instagram account, which took a law firm involved in the case more than 1,000 hours to review.

In the six months leading up to her death, Molly shared, liked, or saved 16,300 posts on Instagram, or roughly 90 posts per day (16,300 posts over about 182 days). This number speaks to how much time she must have spent on the platform, mostly outside her parents’ control or knowledge.

Of the 16,300 posts Molly liked, shared, or saved, 2,100 were related to suicide, self-harm, and depression. Many of them glorified “quiet suffering”: hiding emotional distress and inner struggles behind a façade that gives other people the impression that everything is fine.

How bad was the content Molly viewed in the months leading up to her tragic death? As reported by The New York Times:

“Molly’s social media use included material so upsetting that one courtroom worker stepped out of the room to avoid viewing a series of Instagram videos depicting suicide. A child psychologist who was called as an expert witness said the material was so ‘disturbing’ and ‘distressing’ that it caused him to lose sleep for weeks.”

The case is heartbreaking but, unfortunately, as we shall see in the next section, not a surprising example of how Meta’s business model can push young kids and teens in particular down toxic rabbit holes that are difficult to escape.

Meta’s Business Model