Meta Called OpenAI’s Bluff & Open-Access AI Is Winning

The safety concern and business opportunity of open-access AI

While OpenAI teased SearchGPT and is probably holding hands in a circle right now and praying to AGI, Mark Zuckerberg showed a real sense of business acumen by releasing Meta’s new Llama 3.1 models with open weights under the spiritual leadership of Meta’s AI Chief, Yann LeCun.

We already knew that Meta would continue the open approach from previous releases of its AI model, Llama. This much was set in stone by Zuckerberg’s comments during Meta’s Q4 2023 earnings call. What is more surprising is that the largest version of Llama 3.1 (405B) seems to closely match, and on some benchmarks exceed, the capabilities of leading closed AI models such as GPT-4o, Claude 3.5 Sonnet, and Google Gemini 1.5.

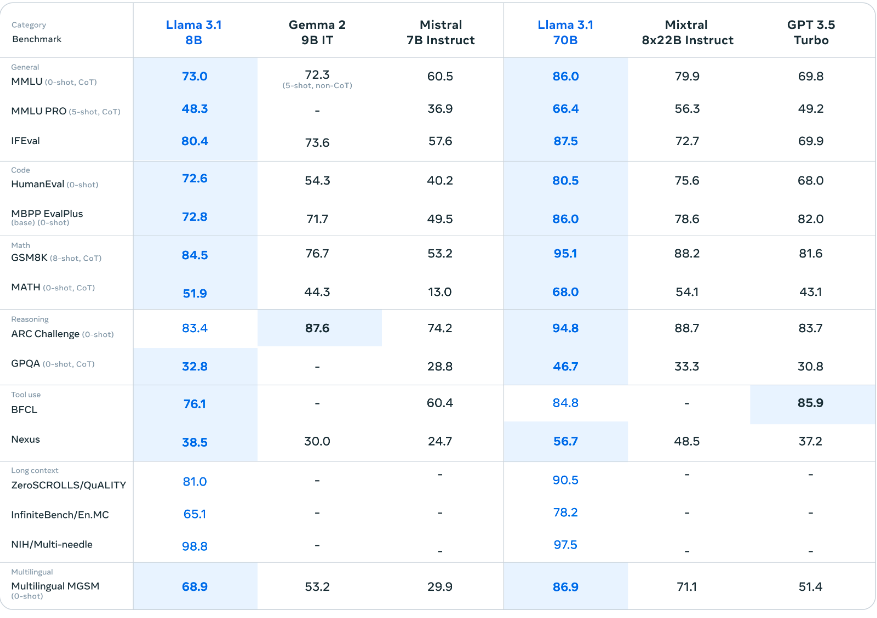

The smaller versions of Llama 3.1 (8B and 70B) outperform both closed and open models of comparable size.

In this post, we will look at whether the open-access approach makes sense from both a safety and a business perspective.

The Safety Concern

Former OpenAI employee and author of the “Situational Awareness” manifesto, Leopold Aschenbrenner, said he was losing sleep over the thought of the Chinese Communist Party (CCP) stealing the model weights and algorithmic secrets behind OpenAI’s models.

Aschenbrenner wrote in his manifesto that “the algorithmic secrets we are developing, right now, are literally the nation’s most important national defense secrets”. This rather disconcerting viewpoint is presumably shared by members of OpenAI’s staff and many insiders in Silicon Valley.

(I gave Aschenbrenner’s manifesto the full Futuristic Lawyer treatment in a post from June on Michael Spencer’s publication, AI Supremacy. I expect to publish an updated version here at some point.)

As I see it, Meta has called OpenAI’s bluff by releasing a model as powerful as Llama 3.1 with open weights and revealing information about its architecture and training process. Meta is indirectly communicating that the greatest danger of AI is not a superintelligent entity going rogue and eradicating humans in an effort to produce more paper clips. The pressing AI safety issues of our time are much more mundane: humans using AI models with malicious intent.

In Meta’s press release “Open Source AI Is the Path Forward”, Zuckerberg frames this as a distinction between “intentional” and “unintentional” harm:

“Unintentional harm is when an AI system may cause harm even when it was not the intent of those running it to do so. For example, modern AI models may inadvertently give bad health advice. Or, in more futuristic scenarios, some worry that models may unintentionally self-replicate or hyper-optimize goals to the detriment of humanity. Intentional harm is when a bad actor uses an AI model with the goal of causing harm.”

The greatest danger of AI, as I see it, is neither unintentional nor intentional harm but rather accelerating systemic inequality. For this reason alone, it makes a lot of sense to develop AI in the open instead of leaving the development to OpenAI (pun intended).

Zuckerberg writes:

“It seems most likely that a world of only closed models results in a small number of big companies plus our geopolitical adversaries having access to leading models, while startups, universities, and small businesses miss out on opportunities.”

With open-access AI, organizations can host and run a powerful foundation model locally, fine-tune the model with proprietary data, customize it for a specific application, or train a whole new model on the foundation model’s output. Summed up in a few words, the advantages of self-hosting AI models are guaranteed privacy, freedom from vendor dependency, and cost-efficiency at scale.

On a grander scale, if a few companies own and control the entire AI ecosystem, it will reflect the language, culture, values, and biases of its creators, typically the demographic of American, middle-aged, white, upper-class males. Open-access AI enables countries, organizations, and individuals to better reflect their local language and cultural heritage in the models’ outputs.

The main argument against providing open access to AI is that bad actors can misuse the technology to produce large amounts of spam and slop, misinformation campaigns, deepfakes, and sexually explicit material, and, in the more futuristic department, to invent new cyber, chemical, or biological weapons.

The counterargument, in Zuckerberg’s words:

“(…) open source should be significantly safer since the systems are more transparent and can be widely scrutinized. Historically, open source software has been more secure for this reason.”

The Meta AI team spends around 10 pages of its Llama 3.1 paper on safety considerations, precautions, and red-teaming. However, a malicious actor who wanted to circumvent the guardrails and safety filters in Llama 3.1 to produce illegal content probably could. Meta’s response is that malicious actors will always exist, as criminals do in any society, but the harm they can do with today’s state-of-the-art models is relatively limited:

“Since the models are open, anyone is capable of testing for themselves as well. We must keep in mind that these models are trained by information that’s already on the internet, so the starting point when considering harm should be whether a model can facilitate more harm than information that can quickly be retrieved from Google or other search results.”

I can think of at least two more reasons why fears of the CCP stealing OpenAI’s algorithmic secrets are overstated and should be treated either as a marketing stunt or as a fear of revealing trade secrets, disguised as sci-fi fan fiction.

Firstly, OpenAI does not take security seriously. The company suffered a security breach in early 2023, when a hacker gained access to its internal messaging systems. The incident only became public when The New York Times reported it in July 2024.

As I addressed two months ago, OpenAI also disbanded its “Superalignment team”, which worked on AI safety. It has not updated its home-made risk framework, although it said it would do so frequently, and it did not properly safety-test its newest model, GPT-4o, before release. Overall, OpenAI is not acting as if it believes it is building an important military secret.

In the press release cited above, Meta diplomatically pokes at the hypocrisy of publicly worrying about product safety while internally taking a laissez-faire stance towards security:

“Our adversaries are great at espionage, stealing models that fit on a thumb drive is relatively easy, and most tech companies are far from operating in a way that would make this more difficult.”

Secondly, Chinese tech companies are already competitive or nearly competitive with American BigTech. For this reason, stealing OpenAI’s algorithmic secrets would not be of great benefit to China.

In November 2023, Kai-Fu Lee’s Beijing-based startup, 01.AI, released a top-performing open model, Yi-34B, that surpassed Llama 2. The Chinese tech giant Alibaba’s model Qwen2-72B-Instruct currently surpasses Llama 3 on Hugging Face’s Open LLM Leaderboard.

At the moment, 01.AI’s Yi, Alibaba’s Qwen, and DeepSeek AI’s DeepSeek are lagging behind the top models on the LMSYS Chatbot Arena Leaderboard, a crowdsourced open platform for LLM evaluations. But that could change in time. China is producing almost half of the world’s AI talent, and its leading AI models are, like Llama, released in the open, so the AI labs can quickly copy each other and make progress without the burdens of secrecy and intellectual property rights.