The Existential AI Threat & Game Theory

What is the greatest existential risk posed by AI?

“We appeal as human beings to human beings: Remember your humanity, and forget the rest. If you can do so, the way lies open to a new Paradise; if you cannot, there lies before you the risk of universal death.”

The Russell-Einstein Manifesto (1955)

The statement above was penned by the philosopher Bertrand Russell in 1955, during the Cold War. In an open letter, Russell warned of the imminent threat and devastating consequences of a nuclear war between East and West, and urged governments to find peaceful means of settling all matters of dispute.

The plea was signed by 11 scientists, 9 of them Nobel Prize winners, including Albert Einstein, who died just a few days after agreeing to sign. The letter became known as “The Russell-Einstein Manifesto”.

Fast forward 68 years to May 2023. A large group of AI scientists and notable industry figures, including Geoffrey Hinton, Yoshua Bengio, Demis Hassabis, Sam Altman, and Dario Amodei, signed an open letter drafted by the nonprofit Center for AI Safety, which simply states:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

The letter doesn’t explain how and why poorly governed AI comes with “the risk of extinction”. That is up to the reader’s interpretation.

No one knows how AI technology will evolve: development could hit a rough patch soon and stall, or it could continue to grow exponentially year after year. For that reason, we can’t confidently say what the most serious societal-scale risk from AI is or how to prepare for it.

The mutually assured destruction that would follow a nuclear war is frighteningly tangible, whereas the existential risk from AI is a different beast altogether. As I see it, the issue doesn’t boil down to a choice between paradise and universal death. The existential risk from AI is that a small percentage of the world’s population will control all the world’s resources and live in a material paradise, while the vast majority of people endure a financial hell.

To put it without the religious imagery, AI can supercharge systemic inequality and further widen the gap between rich and poor. Wealth accumulates with the richest, and whenever a new technology is owned and controlled by a wealthy elite rather than subject to democratic control, it follows the Matthew Effect:

“For to every one who has will more be given, and he will have abundance; but from him who has not, even what he has will be taken away.”

It’s not only financially that AI stands to benefit the billionaire class far more than ordinary people. While the owners and investors of internet platforms stand to profit greatly from AI, users’ attention spans are shortened and gradually molded into recurring revenue streams. The attention economy also has a strange but well-documented tendency to promote tribalism and division while filling the deep pockets of Big Tech. Finally, the tremendous profits from the attention-based business model are not used to solve world hunger or build renewable energy systems. Rather, they are used to further expand the elite’s sphere of influence.

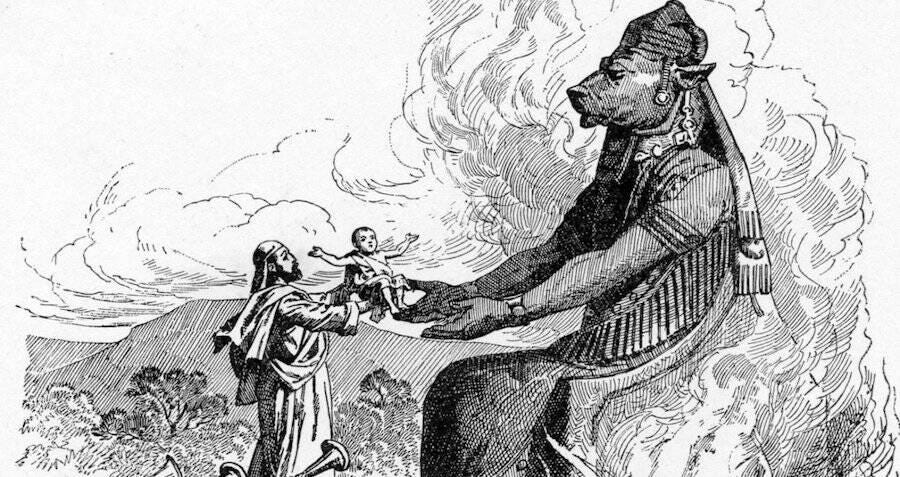

As we will see below, none of this is the personal fault of billionaires, tech CEOs, or shareholders. Stereotypical “tech critics” often get this part wrong. It’s a failure of the market. The only real enemy is “Moloch”, a phenomenon described in the classic essay “Meditations on Moloch” by Scott Alexander.